The method described in this paper is for the most recent version of EndNote for Windows, version X7. It will also work in earlier versions (X3 and higher); however, in older versions, step 2 in the third column of rows C and D in Table 1 will not work as desired because duplicate references are not highlighted. For older versions, before executing the steps in row C, go to All References and sort this group by Page Numbers. Next, instead of clicking on the column heading “Pages” as described in step 2, go to All References and then back to Duplicate References; the Duplicate References group will now be sorted by Page Numbers. Then, click on one of the scroll bars to reactivate the highlighting of duplicates and follow the other steps as described.

A requisite of this method is that, for efficient de-duplication, page numbers should include both a start and an end page.
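To illustrate the requisite above, the following Python sketch (not part of EndNote and not the authors' procedure) splits a set of exported records into those whose page field holds both a start and an end page and those that would need a closer look. The record layout (a dict with a "pages" key) and the function names are hypothetical assumptions.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical record layout: one dict per exported reference; "pages" may be absent or empty.
Record = Dict[str, Optional[str]]

# A start page, a hyphen or en dash, and an end page (e.g. "1008-12" or "1008–1012").
_START_END = re.compile(r"^\s*\S+\s*[-\u2013]\s*\S+\s*$")


def has_start_and_end(pages: Optional[str]) -> bool:
    """True when the page field contains both a start and an end page."""
    return bool(_START_END.match(pages or ""))


def split_by_pagination(records: List[Record]) -> Tuple[List[Record], List[Record]]:
    """Separate records with a full page range from those that need a closer look."""
    complete: List[Record] = []
    needs_review: List[Record] = []
    for record in records:
        if has_start_and_end(record.get("pages")):
            complete.append(record)
        else:
            # Single pages, missing pages, or empty fields end up here.
            needs_review.append(record)
    return complete, needs_review
```

Only the first group can be compared reliably by pagination; the second group is the kind of small subset that still has to be checked by hand.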

Reference Manager allows comparisons by start pages. However, when we tried our method in Reference Manager, it failed: too many false duplicates were removed. The comparison of start page numbers in Reference Manager appears to be flawed; therefore, tailoring our method to Reference Manager is not a good alternative. Additionally, Reference Manager is no longer for sale, and support for it will likely be discontinued. We hope that EndNote will adopt some of Reference Manager's useful features, such as the option to regulate the amount of overlap in the title and other fields, and a comparison on start pages, albeit one more robust than in Reference Manager.
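The idea of regulating the amount of overlap in a title can be illustrated with an adjustable similarity threshold. The sketch below is an assumption for illustration only: it uses Python's `difflib.SequenceMatcher` as a stand-in and does not reproduce Reference Manager's actual matching rule, which is not described here. The function names and the default threshold of 0.9 are hypothetical.

```python
import re
from difflib import SequenceMatcher


def _normalize(title: str) -> str:
    # Lower-case, drop punctuation and collapse whitespace so that trivial
    # formatting differences between databases do not lower the score.
    no_punct = re.sub(r"[^\w\s]", "", title.casefold())
    return " ".join(no_punct.split())


def titles_overlap(a: str, b: str, threshold: float = 0.9) -> bool:
    """Return True when the similarity ratio (0.0 to 1.0) of the titles reaches threshold."""
    ratio = SequenceMatcher(None, _normalize(a), _normalize(b)).ratio()
    return ratio >= threshold
```

Lowering `threshold` makes matching more tolerant of small wording differences between databases at the cost of more false duplicates; raising it does the reverse.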

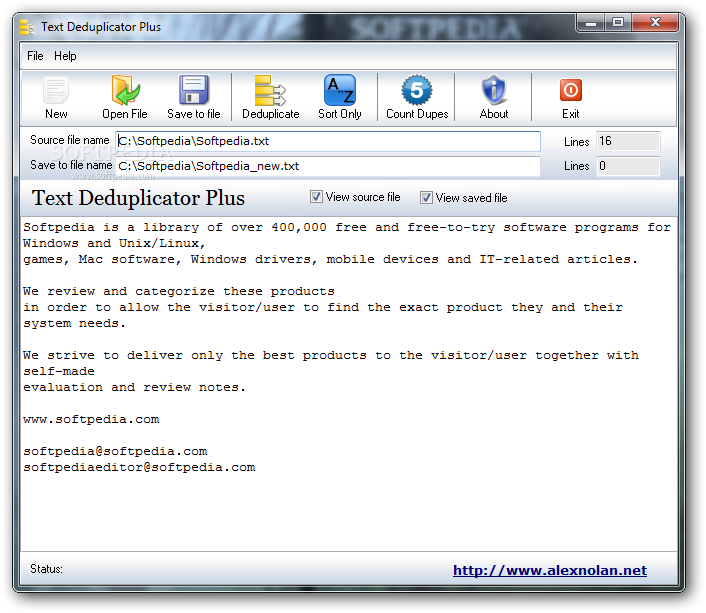


The limitation of this method is that it is tuned to EndNote; however, EndNote is commonly used to manage bibliographic records. The only alternative software to EndNote in which the fields to be compared in the de-duplication process can be changed is Reference Manager, also provided by Thomson Reuters.

DEDUPLICATOR SOFTWARE MANUAL

The method's strength is based on the specificity of the first two steps, which require no manual assessment. The next three steps require checking only a small subset, namely the references that lack page numbers. The last two steps require some additional manual assessment, but screening by page numbers expedites the work, and the number of references to assess is lower than in other methods. Because only a small subset of the search results has to be assessed manually, both the time spent de-duplicating references and the error rate are significantly reduced. To enhance efficiency and accuracy, the steps should be followed closely, in the order presented and without omission. They may be somewhat challenging to master at first, but they become easy to carry out over time.

A recent overview article compared existing software programs but found that none was truly satisfactory. In the authors' opinion, these methods are either very time consuming or impractical, as they require uploading large files to an online platform. Although the de-duplication method that we designed for EndNote resembles procedures regularly carried out by other information professionals, it is more systematic, rigorous, and reproducible.

Unique identifiers for journal articles are digital object identifiers (DOIs) and PubMed IDs (PMIDs). However, these identifiers are not present in every database, and when they are present, they often cannot be exported easily. Thus, they cannot be relied upon to identify duplicates. An alternative involves using pagination, because the often large page numbers in scientific journals, in combination with other fields, can serve as a type of unique identifier. However, this is complicated by variations in the way page numbers are stored. Most biomedical databases use a long format (e.g., 1008–1012), but two important databases (MEDLINE and the Cochrane Library) use an abbreviated one (e.g., 1008–12).
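A minimal Python sketch of how the two page formats could be reconciled before comparison: an abbreviated range is expanded to the long format by borrowing the missing leading digits of the end page from the start page. The helper names, the record layout, and the choice of year and volume as the "other fields" in the composite key are hypothetical assumptions, not EndNote features.

```python
import re
from typing import Dict, Optional, Tuple

# A purely numeric range such as "1008-12", "1008–1012" (en dash) or "1008 - 1012".
_NUMERIC_RANGE = re.compile(r"^\s*(\d+)\s*[-\u2013\u2014]\s*(\d+)\s*$")


def expand_page_range(pages: str) -> str:
    """Rewrite an abbreviated range ("1008-12") in long form ("1008-1012")."""
    match = _NUMERIC_RANGE.match(pages)
    if match is None:
        # Single pages, article numbers such as "e1234" and other forms are only trimmed.
        return pages.strip()
    start, end = match.groups()
    if len(end) < len(start):
        # Borrow the missing leading digits of the end page from the start page.
        end = start[: len(start) - len(end)] + end
    return f"{start}-{end}"


def match_key(record: Dict[str, Optional[str]]) -> Optional[Tuple[str, str, str]]:
    """Hypothetical composite key: normalized pages plus year and volume.

    Returns None when there are no page numbers, because such records
    cannot be matched this way and need a manual check instead.
    """
    pages = (record.get("pages") or "").strip()
    if not pages:
        return None
    year = (record.get("year") or "").strip()
    volume = (record.get("volume") or "").strip()
    return (expand_page_range(pages), year, volume)
```

With this normalization, a MEDLINE record paginated 1008–12 and a record from a long-format database paginated 1008–1012 produce the same key, while records without page numbers yield None and are left for manual checking.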

When conducting exhaustive searches for systematic reviews, information professionals search multiple databases with overlapping content. They typically remove duplicate records to reduce the reviewers' workload associated with screening titles and abstracts; sometimes the reviewers remove the duplicates themselves. Several articles have been published recently on de-duplication methods.
